SPIE's AR/VR/MR All-Day Conference Delivers Immersive Experience

By Ed Biller, Editor, Photonics Online

Every speaker. Every presentation. Attendees filled the chairs, jockeyed for space along the walls, and planted themselves on the floor in the aisles for SPIE’s VR, AR, MR One-Day Industry Conference (and headset demonstrations), held Jan. 29 as part of SPIE Photonics West at San Francisco’s Moscone Center.

Additionally, after feasting on a full day of insight from some of the most prominent names in altered and virtual reality technologies, conference attendees were treated to a panel discussion that assembled pioneers in the space, asking them to muse on its past, present, and future.

Help Wanted: Smaller, Faster, Sleeker, And More Immersive

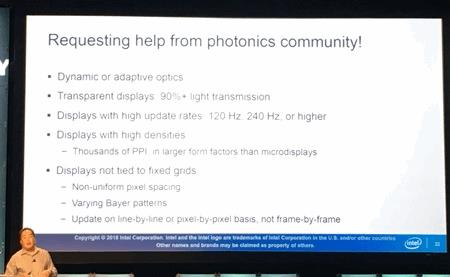

Ronald Azuma — whose team at Intel Labs “designs and prototypes novel experiences and key enabling technologies to enable new forms of media” — kicked off the conference by discussing the hardware challenges that AR/VR developers face, as well as what is needed from the photonics community to move such technology forward.

Jerry Carollo, an optical architect at Google, said his company’s management has been crystal clear on its desires for Google’s VR/AR/MR technologies.

“[They want] 180-degree viewability, 20/20 visual acuity, and [a product weighing] less than 90 grams,” Carollo said. “However, in optics, there’s no free lunch, and there’s no Moore’s law.”

He spoke of the difficult balance that must be achieved to make head-mounted display (HMD) design attractive to consumers. Developers must consider weight, center of gravity, comfort, and, frankly, whether the device “looks cool.” Additionally, there are lesser-thought-of considerations, like ease of donning/taking off the gear, whether it is gender friendly, and which features should be adjustable.

“Every time you add an adjustment, you add weight and reduce comfort,” Carollo said.

Doug Lanman, Director of Computational Imaging at Oculus Research, discussed the hardware challenges of computational displays. While higher resolution and wider fields of view topped his wish list, Lanman called vergence-accommodation conflict “a challenge that researchers can afford to work on.”

He said that current VR headsets mostly use “fixed focus” — which keeps everything in the image in focus — but countered that some blur is desirable, because it adds to the realism of what’s depicted. To achieve this optically, Lanman said at least two elements are required, both of which must be contained in a small package: an eye tracker that works for everyone, and real-time rendering algorithms.

“You have to look at the overall system,” he said. “How does it represent natural images?”

Oculus’ approach to locally varying focus, which has reached the research-prototype stage, is a “digital compound lens,” which Lanman said works but is very early in development. He added that creating enough planes to represent depth is an ongoing challenge.

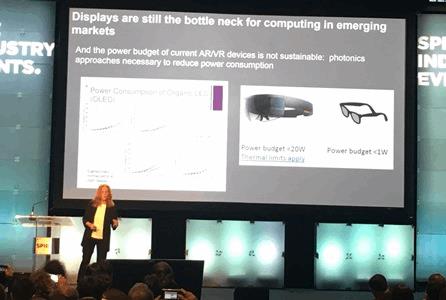

Underlying these hardware challenges is the constant demand that devices provide more capability while using less power.

“Most of the world can’t have high-fidelity computing because of the power requirements. It’s not just about VR/AR,” said Tish Shute, director of AR/VR/MR Corporate Technology Strategy for Huawei USA. She added that optical metalenses and metasurfaces are a huge advance in the right direction toward solving this issue.

The conference’s speakers addressed eye- and hand-tracking sensors as well.

Timothy L. Wong of 3M’s Electronics Materials Solutions Division laid out VR industry needs for eye tracking, including accurate gaze-based user input, emotion cognition, and dynamic focus. He also echoed earlier speakers’ desire to eliminate vergence-accommodation conflict.

Near-infrared (NIR) mirrors are currently the most common solution for eye tracking in VR/AR headsets.

Meanwhile, Yiwen Rong, VP of Product Development for uSens, reinforced the point that a device can have the best resolution and comfort but, if your sensors stink, it’s all for naught.

AR solutions are overtaking pure VR in market value, Rong explained, and consumers will demand untethered movement and accurate hand tracking in all scenarios, including gesture communication and both direct and indirect manipulation of the environment.

Ask The Pioneers

The all-day AR/VR/MR conference culminated in a panel session titled “VR/AR/MR: Yesterday, Today, And Tomorrow.” Bernard Kress, a Partner Optical Architect at Microsoft/HoloLens, moderated the forum, along with Leo Baldwin, a principal engineer with Amazon.

The panelists discussed hardware and software challenges, consumer trends, and even delved into the philosophical and moral implications of technologies now being created. The panel included:

- Jaron Lanier, a VR pioneer, computer philosophy writer, computer scientist, visual artist, and composer

- Ronald Azuma, a pioneer/innovator in augmented reality, visualization, and mobile applications at HRL Labs, Nokia Research Center, and Intel Labs

- Thad Starner, an early smartglasses pioneer — who wore a pair of smartglasses throughout the panel discussion — as well as the founder and director of the Contextual Computing Group at Georgia Tech's College of Computing

- Mark Bolas, a VR pioneer currently working on the "vision strategy" for Microsoft's Mixed Reality platform

- Marty Banks, a vision specialist for HMDs, studying the effects emerging technology, screens, and virtual reality headsets have on our eyes

- David Chaum, an AR hardware developer, recognized as the inventor of digital cash and other fundamental innovations in cryptography, including privacy technology and secure election systems

- Jim Melzer, one of the pioneers for defense and military HMDs

Melzer was asked to kick off the panel discussion, having been involved in the early days of military HMD development.

“We’ve learned a lot about display technology, tracking technology… and a whole lot about human factors,” Melzer said. As an example of the latter, he cited color research conducted for AR displays, looking at what might show up best against different backgrounds (e.g., sky, concrete, dense foliage).

“What I think is one of the coolest display technologies is waveguide/lightguide technology,” he added. “We now have the tools to be able to do this efficiently.”

Melzer noted that VR/AR technology started with simply tracking straight ahead, in a cockpit, then progressed to tracking in a “cave” (an enclosed environment). Its next logical progression is completely untethered tracking.

However, “we really need to be concerned with the focal environment we create,” Banks added, noting that a lack of binocular vision in early AR/VR technologies led to eye strain, which at times was misidentified and demonized as brain damage from the displays. Myopia (nearsightedness) diagnoses also have been laid at VR/AR displays’ doorstep in the past, though the connection is murky; myopia generally forms at a young age, and diminishing vision often accompanies the aging process.

Monocular display technologies, said Starner, are “better, and safer, in my opinion, than a transparent display.”

Lanier had a good laugh reminiscing about those flops, calling the fact that so many consumers bought the Power Glove “incredible.” But that isn’t to imply he takes VR/AR technology — both its promise and its shortcomings — lightly: Lanier helped create that first VR surgical simulator, and he stoically told the audience how his wife’s life was saved by a surgeon who had trained for the procedure in a related advanced simulation.

More than 20 years later, Lanier and others were asked to contemplate aloud the moral and philosophical conundrums that modern VR/AR has created, among these privacy, disparity, and our very humanity.

Much like the television and the cell phone, one common fear surrounding AR/VR is that it will draw people deeper into their own little worlds, diminishing their connection to others.

“What I’m hoping is that these technologies help us to better connect to the real world [rather than the opposite],” said Starner. Lanier added that, while fabricated images can be fun, VR should help people appreciate the depth of actual human perception, which technology simply is unable to capture.

Regarding privacy, Chaum noted that the discussion around privacy and computing is 30 years old, but bringing artificial intelligence (AI) into the developmental fold makes things much more frightening.

“Computers can know more about you than other people know, more than you even know about yourself,” he said. Chaum also said that emotion tracking could prove to be intrusive, despite its developers’ intentions.

“Privacy will not only be a first-rate technology advantage,” Starner added, “but a first-rate corporate/business advantage.”

Regarding VR/AR’s best applications, now and in the future, the panel had no shortage of ideas, but most speakers balked at the thought of “hard AR” — where virtual objects occlude the real world.

“It’s just not ethical yet,” Lanier said, explaining that a person who sees something, and doesn’t know if it’s real, didn’t sign up for that experience and is being deceived. Starner used the example of a Windows error message following you around the room in labeling “hard AR” a “tough sell.”

Still, VR/AR applications in industry, tourism, gaming, and software development abound.

Starner also brought up the idea of a world (let’s downsize that to the neighborhood you live in, for the sake of visualization) in which most signage has been “decluttered,” replaced by information imparted by the display in one’s smartglasses.

“I think one of the great missions of VR/AR is to spare the world from stupid stuff,” Lanier added, to applause.

Audience questions followed the panel discussion, including a query regarding whether VR/AR technologies would create a new dynamic of haves and have-nots, the “have-nots” being those unable to afford or learn the new technology. Azuma was quick to respond, recommending the audience read Vernor Vinge’s Rainbows End, set in a world where augmented reality is ubiquitous, with all the perils that implies.

Other panelists agreed to put some thought into the topic, and before dispersing were asked what else might be needed to keep VR/AR development rolling in the right direction. Brighter OLEDs? Lower-power lasers? More affordable metamaterials?

“Yes!” was the consensus answer to all. “And better batteries,” added Lanier, “or everything has to be that much more efficient.”