The Driverless Car Revolution Creates Massive Opportunities For Optics

By Sabbir Rangwala, Patience Consulting

About 90 million new cars are sold globally per year, driving a massive economy (~$3T/year in new car sales alone). But it is a brutally competitive, cyclical, capital-intensive, and risky business.

Shared transportation is disrupting the traditional model of car ownership, and how people choose to move from point A to point B. Companies like Uber, Didi, Lyft, and others have essentially made transportation an app-based utility, providing tremendous convenience, increased productive (or meditation!) time, reduced stress, and lower traffic and parking congestion. New business models are emerging around the shared transportation space which, if exploited correctly, can produce massive economic and competitive value. Players in this space who do not have a strategy around these trends will, unfortunately, become extinct or shrink into niche players.

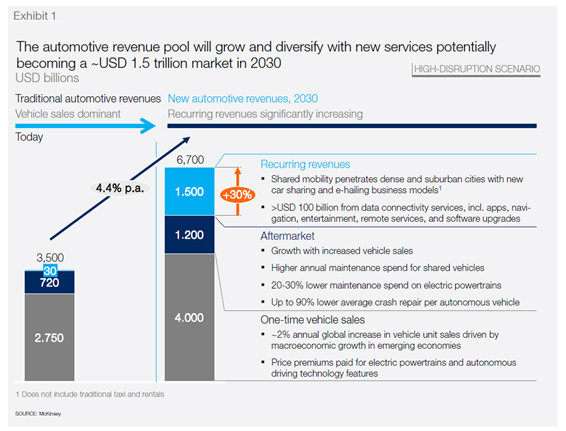

A study by McKinsey [1] predicts that the shared transportation economy will increase recurring revenues from ~$30B/year today to $1.5T/year by 2030. Different players in the transportation ecosystem are vying to control this extremely lucrative revenue (operating margins of 15-20 percent, versus current margins of 5 percent for new car sales), including traditional automotive players (OEMs and Tier 1 suppliers), as well as technology and ride-sharing companies.

Fig. 1 — Recurring Automotive Revenue Pool

These revenue streams and their profitability depend on the practical implementation of driverless cars, since human drivers today account for about 50-60 percent of the total revenues generated by ride-hailing entities like Uber and Lyft. None of the major ride-hailing companies are profitable today, with Uber alone losing about $1B per quarter. There are significant ongoing efforts and investments in the driverless car space, initially spurred by the DARPA Grand Challenge in the mid-2000s.

The DARPA Grand Challenge was a grand challenge in every sense of the term, bringing together luminaries in fields as varied as computer science, sensing, automotive engineering, and robotics from academia, car manufacturers, and technology companies. The experience germinated ideas of how such technology could radically change the business of transportation, and how these different organizations could work together. Google invested aggressively in this effort, and its driverless car division (now called Waymo) has been working on this technology for close to a decade, with more than $1B invested and significant progress to date. Today, most players in the transportation ecosystem are pursuing the holy grail of driverless cars, because the financial and business impacts are substantial.

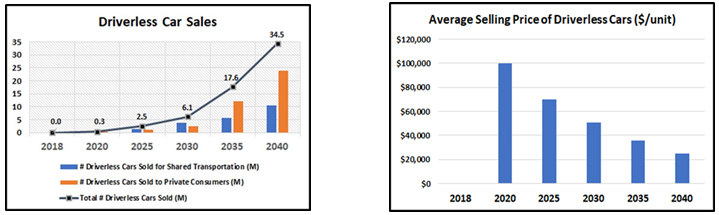

Fig. 2 shows the number of driverless cars sold per year, projected based on studies by McKinsey [1] and Goldman Sachs [2]:

Fig. 2 — Driverless Car Sales and Selling Price

Initial sales of driverless cars are expected to be dominated by shared transportation needs, where the car is viewed as a capital investment, with very high utilization rates and entities able to afford the higher selling price. Beyond 2030, private sales of driverless cars start dominating as price points become more affordable for consumers (who own cars for personal convenience, with very low utilization rates). In 2025, the total number of driverless cars is projected at 2.5M, with a total car sales revenue of $175B.

The Role Of Sensors In Perception

Driverless cars need two critical capabilities to deliver a safe, efficient, and comfortable ride to their customers:

- Perception: provides the computer with data describing exactly what objects are in the vicinity of the car, as well as other structures and information, such as traffic lights, road markings, and traffic signs.

- Path planning and control: uses perception data (in conjunction with other data, like maps and routes) to localize the car position, predict traffic flow, plan the path of the car, and apply the control action (accelerate, steer, brake, park).

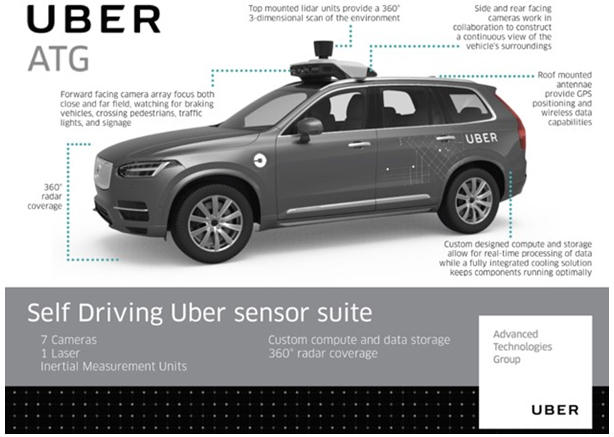

The perception function is safety-critical, and is enabled by an array of sensors that provide a 360-degree view around the car. Fig. 3 shows a typical sensor suite used today for testing the performance of driverless cars, consisting of mono and stereo cameras, radar, ultrasonic sensors, inertial measurement units (IMUs), and LiDAR. The specific number of sensors and their locations will vary among companies as the technology matures into production versions. Sensors such as cameras and radars are fairly mature, since they have been deployed in regular cars for over a decade. However, LiDAR is different.

LiDAR as a technology is fairly mature for other applications (space, aerial mapping, defense, surveillance), but its deployment in the automotive arena is very new. Almost all companies engaged in driverless car research believe that the 3D depth information provided by LiDAR is critical for the perception function (radar cannot provide this information at reasonable resolution; stereo cameras do not have the range, and also have degraded performance in light-starved conditions). Tesla is an exception, in that it does not believe LiDAR is required, and holds that autonomy can be achieved purely with cameras, radar, and intelligent computing. But this remains to be proven, since a majority of successful driverless car trials to date have deployed LiDAR. Elon Musk has promised to have a driverless Tesla demo by mid-2018 [3].

Fig. 3 — Sensor stack as part of a Perception System

The Role Of Optics In Driverless Cars

Given the almost universal acknowledgement that LiDAR is a critical technology for safe and efficient implementation of driverless cars, there is significant investment in this area, with over 30 companies engaged in this endeavor globally (primarily in the United States and China, with surprisingly limited activity in Europe, Japan, and South Korea).

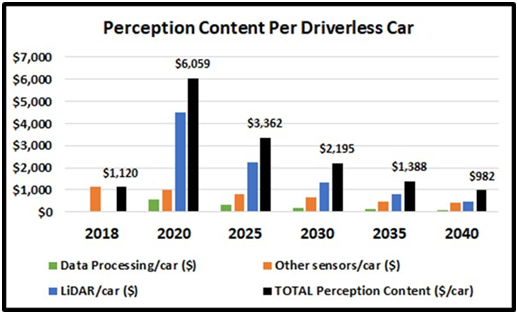

In terms of the business case (as described in Fig. 2), by 2025 we expect a total of 2.5 million driverless cars, with a total revenue of $175B. The driverless module functionality is estimated to account for about 10 percent of the vehicle’s sales price (similar to a very high-end feature), with the perception stack occupying about 5 percent, for a market opportunity of $8.5B.

Citibank [4] provides cost estimates of cameras, radar, ultrasonic sensors, and IMUs, based on which the affordable LiDAR content per car can be estimated (Fig. 4). LiDAR content per car drops from $4.5K in 2020 to $2.2K in 2025 as the technology matures and the volume of driverless cars increases. The total market opportunity for LiDAR increases from $1.3B in 2020 to $5.5B in 2025.
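The sizing arithmetic behind these figures can be sketched in a few lines of Python. This is only a back-of-the-envelope check using the numbers quoted in the text; the function and variable names are illustrative, and the implied 2020 unit volume is derived here rather than stated in the article.

```python
# Projections quoted in the article (not measured data).
CARS_2025 = 2_500_000         # driverless cars sold in 2025
REVENUE_2025 = 175e9          # total driverless car sales revenue, USD
PERCEPTION_SHARE = 0.05       # perception stack share of vehicle price
LIDAR_PER_CAR_2025 = 2_200    # affordable LiDAR content per car, USD


def average_selling_price(revenue, cars):
    """Average price per car implied by total revenue and unit volume."""
    return revenue / cars


def lidar_market(cars, content_per_car):
    """Total LiDAR market as units sold times LiDAR content per car."""
    return cars * content_per_car


def implied_units(market, content_per_car):
    """Unit volume implied by a market size and a per-car content figure."""
    return market / content_per_car


asp = average_selling_price(REVENUE_2025, CARS_2025)
perception_per_car = asp * PERCEPTION_SHARE
other_sensors_per_car = perception_per_car - LIDAR_PER_CAR_2025

print(f"Average selling price:     ${asp:,.0f}")
print(f"Perception budget per car: ${perception_per_car:,.0f}")
print(f"Left for non-LiDAR sensors: ${other_sensors_per_car:,.0f}")
print(f"2025 LiDAR market: ${lidar_market(CARS_2025, LIDAR_PER_CAR_2025) / 1e9:.1f}B")
print(f"Implied 2020 volume: {implied_units(1.3e9, 4_500):,.0f} cars")
```

Under these inputs the average selling price works out to $70,000 and the perception budget to $3,500 per car, leaving about $1,300 for cameras, radar, ultrasonic sensors, and IMUs once the $2.2K LiDAR content is subtracted. The 2025 LiDAR market of 2.5M cars at $2.2K each reproduces the $5.5B quoted above. A straight 5 percent of $175B comes to $8.75B, slightly above the $8.5B perception figure in the text; the source does not explain the difference.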

It should be noted that public pronouncements of a $200 LiDAR by 2021 are not consistent with financial models of driverless cars, their role in a shared transportation economy, the kind of performance requirements that LiDARs need to meet, or the volumes and manufacturing experience required to achieve such price points. It is true that, once driverless cars become highly commoditized and driven by the private consumer market, the LiDAR content per car will need to approach price levels similar to those of radar and cameras today. But this is not expected to occur for the next couple of decades, and only when the volume of driverless cars sold per year is in the 15-20M range.

Fig. 4 — Perception Content Per Driverless Car

The BOM (Bill of Materials) for an automotive LiDAR is composed of the following functional blocks:

1. Laser source
2. Detector
3. A method for scanning optical energy to and from the scene
4. Micro-optics for beam shaping and focusing
5. Bulk optics
6. Thermal management
7. Mechanics
8. Electronics
9. Computing/DSP

Blocks one through five (laser source through bulk optics) sit clearly in the expertise domains of optics companies, and occupy a significant portion of the LiDAR revenue stream discussed above. Assuming optics occupies at least 50 percent of the BOM, the revenue opportunity for optics suppliers is significant: on the order of $700M in 2020, growing to $3B by 2025.
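As a rough check on this estimate, the sketch below applies the 50 percent floor to the LiDAR market figures quoted earlier, and also back-solves the optics share that the rounded $700M and $3B figures would imply. The names are illustrative, and the implied shares are derived here rather than stated in the article.

```python
# LiDAR market projections quoted in the article, USD.
LIDAR_MARKET = {2020: 1.3e9, 2025: 5.5e9}
# Rounded optics opportunity quoted in the article, USD.
OPTICS_QUOTED = {2020: 0.7e9, 2025: 3.0e9}
OPTICS_BOM_FLOOR = 0.50  # "at least 50 percent" of the BOM


def optics_opportunity(lidar_market_usd, optics_share=OPTICS_BOM_FLOOR):
    """Optics revenue as a fixed share of the LiDAR market."""
    return lidar_market_usd * optics_share


def implied_share(optics_usd, lidar_market_usd):
    """Optics share of the BOM implied by a quoted optics revenue figure."""
    return optics_usd / lidar_market_usd


for year, market in LIDAR_MARKET.items():
    floor = optics_opportunity(market)
    share = implied_share(OPTICS_QUOTED[year], market)
    print(f"{year}: floor ${floor / 1e9:.2f}B, implied share {share:.1%}")
```

At exactly 50 percent the opportunity is $0.65B in 2020 and $2.75B in 2025, so the quoted figures correspond to an optics share of roughly 54 percent in both years, consistent with the "at least 50 percent" assumption.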

Of course, optics companies that choose to develop the entire LiDAR system can capture the full revenue opportunity, and produce better margins because of vertical integration. Given the strong coupling between the optics technology and LiDAR performance, current optics players (especially the ones dominating the laser and detector supply) are best suited to producing the highest-performance LiDARs for automotive deployment.

Challenges For Optics Companies Engaging In Automotive LiDAR

The biggest challenge optics companies face today in penetrating the lucrative market afforded by driverless cars is one of strategy: pivoting from their existing business. The legacy of most optics companies is in the telecommunication, defense, space, and material processing industries, which are extremely competitive and approaching commoditization, but are viewed as lower risk. On one hand, the automotive business looks very compelling; on the other, breaking out of existing business challenges and focusing resources and mindshare on this new domain is not easy. But many companies are starting this journey (although reactively, rather than proactively). They are also still deciding whether to be a broad-based component supplier or a LiDAR system provider.

A second challenge is the sheer diversity and fragmentation of current LiDAR technologies in terms of operating mode, wavelengths, scanning architectures, laser technology, and detector technology. Unlike the telecom industry, no industry standards exist around LiDAR. Optical component players have to invest in understanding the space so that they can proactively focus resources on the approaches that are most likely to succeed. This is not straightforward, since it requires knowledge spanning multiple industries and technologies.

Cost and reliability are additional challenges. Given the safety assurance role of the perception stack in general, and LiDAR in particular, the ability to balance cost, reliability, performance stability, and operation over diverse weather, temperature, cleanliness, and moisture conditions is critical. Optics companies are best positioned to achieve these metrics, because they have a demonstrated track record of doing so in other markets.

About The Author

Sabbir Rangwala founded Patience Consulting, LLC in November 2017. He specializes in perception, sensing, and machine learning for movement automation. Prior to this, he was President and Chief Operating Officer of Princeton Lightwave, where he successfully pivoted the company from a defense component supplier to a leading automotive LiDAR player, resulting in an acquisition by Argo.ai. Prior to this, Sabbir worked in various leadership roles in the optics arena for 25 years.

He can be reached at srangwala@verizon.net

References:

1. McKinsey, “Automotive revolution – perspective towards 2030”, January 2016

2. Goldman Sachs, “Rethinking Mobility”, May 2017

3. https://techcrunch.com/2018/02/07/elon-musk-expects-to-do-coast-to-coast-autonomous-tesla-drive-in-3-to-6-months/

4. Citibank, “Car of the Future V3.0”, November 2016